Overview

I’m a mathematician with a strong interest in applied data science and its interdisciplinary impact. My background enables me to approach complex data-driven problems in a diverse array of fields like computational imaging/signal processing (PhD focus), customer behavior modeling, machine learning, DevOps, and financial risk analysis. I am very passionate about developing data-centric solutions that advance both technology and sustainability. I have outlined my relevant data scientific skills, background and project experiences below.

Skills

Machine Learning and AI: Supervised/Unsupervised learning, Forests, (Deep, Conv) Neural networks, XGBoost, etc.

Data Science: Regressions, Predictive modelling, Topological Data Analysis, Statistical/Stochastic Learning, etc.

Programming Languages: Python, R, SQL, Julia, C++.

Tools: PyTorch, Tensorflow, Scikit-learn, Numpy, Langchain, BI/Tableau.

Certificates: Data Analytics, Adv. Data Analytics (Google), Adv. Learning Algorithms (DeepLearning.ai), AWS.

Data Projects Summary

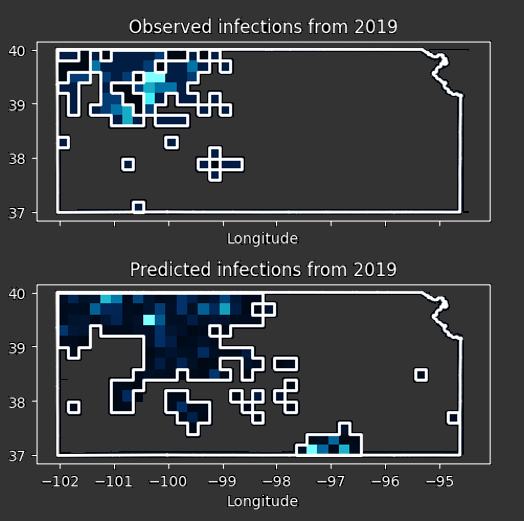

Predicting spatiotemporal dynamics of Chronic Wasting Disease (CWD) in KS

- Link: Manuscript in preparation.

- Goal: Develop a dynamic model to predict presence and spread of CWD in KS from highly unbalanced data.

- Context: Project done as an inter-disciplinary collab. with the dept. of Veterinary Pathobiology and MUIDSI.

- Deliverables:

- Developed a (dynamic) spatiotemporal model capturing CWD movement in KS

- Performed parameter-fine tuning through cross-validation with sampling data

- Performance:

- Max accuracy (fixed time, over space): 90.16%

- Min accuracy (fixed time, over space): 85.11%

- Overall accuracy (across space and time): 88.13%

- Confirmed established trends, identified new migration pathways and suggested intervention measures.

- Derived novel means to quantify the rate and direction of spread of disease

- Developed ways to estimate rates and direction at different scales

- Future work:

- Aggregated KS soil covariate and land cover data and performed PCA to improve model performance

- Esimated improved accuracy after covariate integration $\sim$ 93% [analysis underway]

Predicting passenger survival aboard Titanic - Kaggle competition

- Link: Kaggle notebook

- Goal: Predict passenger survival aboard the Titanic.

- Context: Project done as a part of the Kaggle machine learning competition

- Deliverables:

- Placed in the top 1.3% globally with a bagged random forest model (with conditionally imputed trees)

- Performed EDA and engineered nine new features, five of which were highly correlated with Survival

- Evaluated various models with training accuracy as high as 83.3% under a 10-fold cross validation

- Champion model: Bagged random forest

- Test accuracy: 81.334%

- CV standard deviation: 0.06%

Predicting user churn with Waze data

- Link: GitHub

- Goal: Predict user churn behavior by analyzing data collected from Waze app.

- Data Source: Waze app (via Google)

- Context: Project done as a part of an advanced data analytics certification powered by Google.

- Deliverables:

- EDA findings that indicated churn trends based on distance driven.

- Engineered features that improved predictive power of the champion model.

- Built regression and machine learning models that predicted user churn behavior.

- Champion model: XGB-classifier

- Accuracy: 81%

- Recall: 16.5%

- Champion model: XGB-classifier

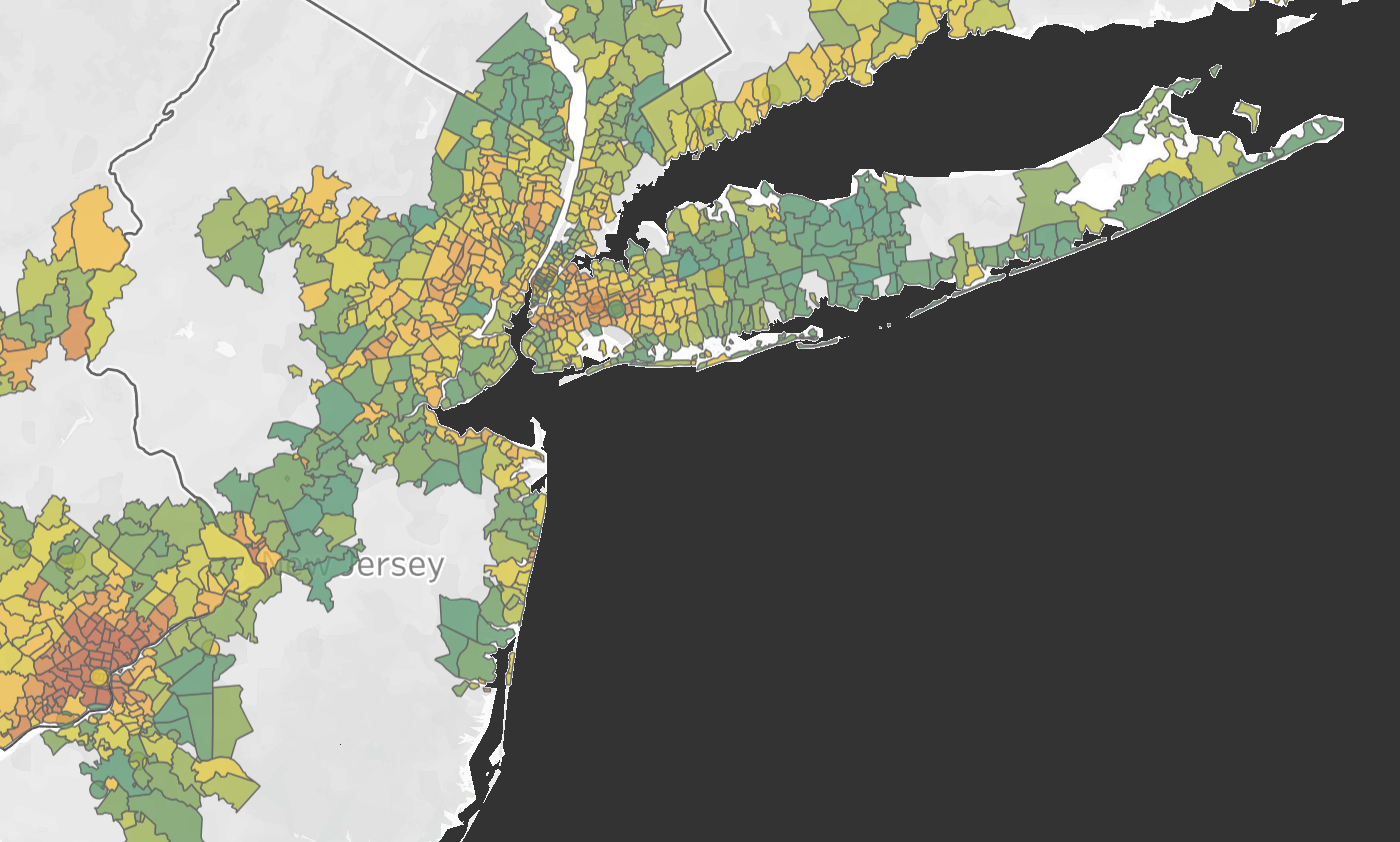

Harvest the sun! - Optimizing solar practicality across mainland US

- Link: Blog post

- Goal: Rank states/zip based on the socio-economic feasibility of installing solar panels to the median house.

- Data source: Project sunroof, Google.

- Context: Project done as a part of a data analytics certification powered by Google.

- Deliverables:

- Engineered three indices that captured unique aspects of solar feasibility.

- Impact index: Amount of CO2 offset per installation.

- Economical index: Short term costs and govt. subsidies for installation.

- Savings index: Long term savings, EB offset from installation.

- Designed a Tableau dashboard to communicate findings.

- Engineered three indices that captured unique aspects of solar feasibility.

Nucleation of market bubbles

- Link: GitHub

- Goal: Adapt physical nucleation theory (JMAK) to the financial sector to predict market bubbles

- Data source: FRED economic data

- Context: Project done as a part of undergraduate senior capstone project

- Deliverables:

- Recontextualized the Avrami-JMAK equations, from physical nucleation theory, to the financial setting.

- Fitted model to 2007 housing data to identify scale-invariant indicators of formation/collapse of bubbles.

- Model RMS error: 12% over prior bubble phases of stocks from selected sectors.

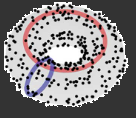

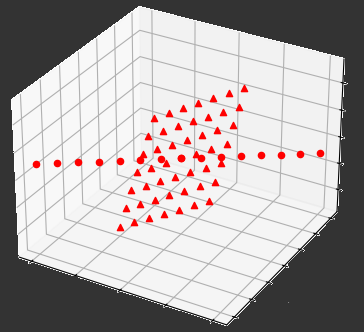

Topological Data Analysis

- Link: Blog posts - Part 1, Part 2, Part 3

- Goal: Build a topological machine learning pipeline that classifies shapes based on topological features.

- Context: Project done as a part of doctoral comprehensive exams on topological data analysis.

- Deliverables:

- Implemented a topological ML pipeline that

- Extracted underlying topological information from point clouds (persistence barcodes).

- Trained a random forest classifier on the topological features.

- Achieved considerable dimension reduction by reducing number of training features from $O(3N)$ to $O(1)$, while retaining competitive performance.

- Out-of-bag accuracy scores:

- Synthetic data: 100%

- Real life data: 82.5% (Source: Princeton computer vision course)

- Implemented a topological ML pipeline that

Robust Subspace Recovery

- Link: GitHub

- Goal: Extract a smaller dimensional subspace that contains “enough” points of a partitioned point cloud $\mathcal{X}$

- Context: Project done as a part of doctoral comprehensive exams on quiver representation theory

- Deliverables:

- Implemented an algorithm that extracted a smaller linear subspace that contains enough points of $\mathcal{X}$

- Furthermore, the extraction was simultaneous in the following sense:

- $\mathcal{X}$ is formed by concatenating various point clouds into a single matrix

- The extracted subspace contains enough points of this concatenation

- Helps mitigate cases where such a recovery is not possible given only a single factor of the concatenation.

ML and programming

Solving mazes and simulating cycles - a saga of genetic algorithm

Link: GitHub - Maze Solver, ODE Parameter Estimator. Blog post - coming soon

Goal: Implement agents guided by the genetic algorithm to

- Solve a randomly generated maze

- Estimate the parameters of a coupled system of Ordinary Differential Equations

Deliverables: Developed agents (with hereditary genes) that

- Solved a randomly generated maze in $O(M*N^2)$ time

- Estimated parameters of the Predator-Prey system within $5$ generations of $5$ agents each.

- Here is a sample run:

- Here is a sample run:

Numeripy - python package

Goal: Develop a python package containing numerical ODE solvers and matrix methods

Deliverables:

numeripy.ODE_solversoffers numerical ODE solvers that offer robust precision control and flexibilitynumeripy.matrix_methodsoffers matrix methods aimed for use in numerical linear algebra tools- In addition,

numeripy.latexit()generates latex formatted tables ready to be pasted into a LaTeX document